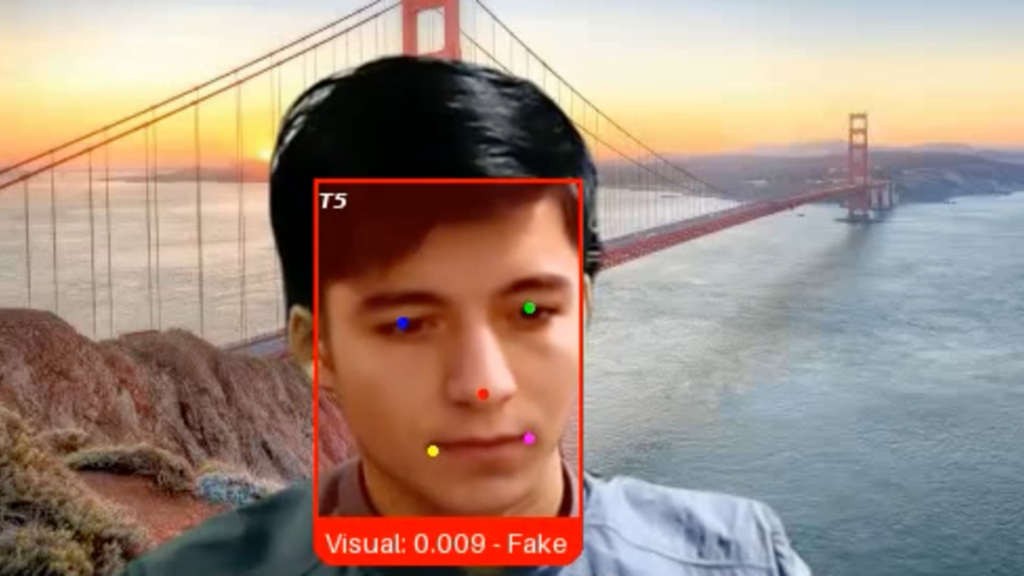

An image provided by Pindrop Security shows a fake job candidate the company has named “Ivan X.” This individual used deepfake AI technology to obscure his true identity, according to Pindrop CEO Vijay Balasubramaniyan.

Courtesy: Pindrop Security

Rise of Deepfake Job Candidates

Pindrop Security, a voice authentication startup, recently encountered a job applicant who stood out among many candidates. The applicant, a supposed Russian coder named Ivan, appeared highly qualified for the senior engineering position. During a video interview, however, Ivan’s facial expressions did not match his spoken words, which raised red flags for the recruiter.

The Threat of Deepfake Technology

Dubbed “Ivan X,” this scammer utilized advanced deepfake software and generative AI tools in an attempt to secure employment. Balasubramaniyan remarked, “Gen AI has blurred the line between what it means to be human and what it means to be machine.” Scammers are now employing fake identities with forged faces and voices to secure jobs, even going as far as swapping faces with real individuals during interviews.

Emerging Threats in the Recruitment Process

Companies have long battled cyber attacks from hackers exploiting software vulnerabilities. Now a new threat has emerged: candidates who use AI tools to create fake profiles, generate deceptive resumes, and give misleading answers during interviews. Gartner predicts that by 2028, one in four job candidates globally will be fabricated.

Implications of Hiring Fraudulent Employees

The consequences of hiring a fraudulent job seeker can be severe and vary widely. Once employed, these impostors can deploy malware, steal sensitive data, or even siphon funds from companies. In some instances, they simply collect salaries they would otherwise not be eligible for, a concerning reality for many organizations.

Industry-Wide Surge of Fake Job Seekers

Cybersecurity firms in particular have seen an increase in fraudulent job candidates. Experts, including Ben Sesser, CEO of BrightHire, note that the problem has escalated significantly in the last year. Companies that hire for remote roles are attractive targets, and high-profile organizations have unknowingly hired impostors linked to North Korea, with severe financial consequences.

Protecting Against Impostors

The issue of fake candidates extends beyond cybersecurity firms. Lili Infante, CEO of CAT Labs, mentioned that her organization often receives applications from numerous potential North Korean spies, making the recruitment process increasingly complicated. Companies are now turning to identity-verification services to combat this trend.

The Need for Enhanced Security Measures

Despite incidents reported by the Department of Justice (DOJ), many companies remain unaware of the risks associated with fake job candidates. As deepfake technology continues to advance, the problem may worsen. Balasubramaniyan explained that Pindrop used a new video authentication system to identify Ivan X as a fraud, highlighting the growing necessity for robust screening and verification methods in recruitment.

In conclusion, as technology continues to evolve, organizations must adapt their hiring processes to address the emerging threats posed by deepfake candidates. Increased vigilance and the implementation of advanced verification technologies will be critical in safeguarding against fraud in the recruitment landscape.

Deepfake Technology: A New Challenge for Job Recruitment

A recent incident involving a fraudulent job applicant has highlighted the growing threat of deepfake technology in recruitment processes. This challenge is becoming increasingly pertinent as scammers leverage artificial intelligence tools to create realistic identities, blurring the lines between genuine candidates and impostors.

The Case of the Deepfake Candidate

In a notable example, a voice authentication startup was confronted with a candidate dubbed “Ivan X,” an applicant who used advanced deepfake software to mask his identity. He appeared to be a Russian coder well qualified for a senior engineering role, but his facial expressions did not match his speech during a video interview, which raised red flags.

The Emergence of Fake Job Seekers

Fake job applicants are on the rise, with Gartner predicting that by 2028, one in four job candidates may be fabricated. Scammers use AI-generated photos, fabricated employment histories, and even prepared interview answers to build sophisticated identities that are hard to detect.

Security Risks for Employers

Employers face a range of risks when hiring fake candidates, from cyber attacks to data theft. Once inside a company, these impostors may install malware or steal sensitive information. In some cases, they simply collect salaries without any intention of fulfilling their job responsibilities.

Surge in Remote Hiring Threats

Cybersecurity firms and tech companies are particularly vulnerable because they rely heavily on remote hiring. Many have reported a dramatic increase in fraudulent job applications, as bad actors target organizations that offer flexible work arrangements. As a result, the hiring process itself has become a significant security weak point.

Global Network of Fraudulent Candidates

The issue is not limited to specific countries; it now involves criminal organizations from various nations. Some companies have unknowingly hired impostors linked to hostile states such as North Korea, which complicates the cybersecurity landscape. These workers often use stolen identities to secure remote jobs and send their earnings back to their home countries.

Combating Deepfake Threats

In response to this growing concern, employers are exploring advanced verification technologies. Services aimed at identity verification are becoming essential in filtering out fraudulent applications. Companies are also adapting through improved recruitment processes, with many turning to automated solutions to enhance security.

Conclusion: Evolving Strategies in Recruitment

As deepfake technology continues to advance, organizations must adapt their strategies in recruitment and cybersecurity. The ability to identify and mitigate the risks associated with fake applicants is crucial for maintaining both security and integrity in the hiring process. Companies are recognizing that traditional methods of identification may no longer suffice, making the investment in technology essential for future success.