Emerging Threat: Job Seekers Using Generative AI for Fraud

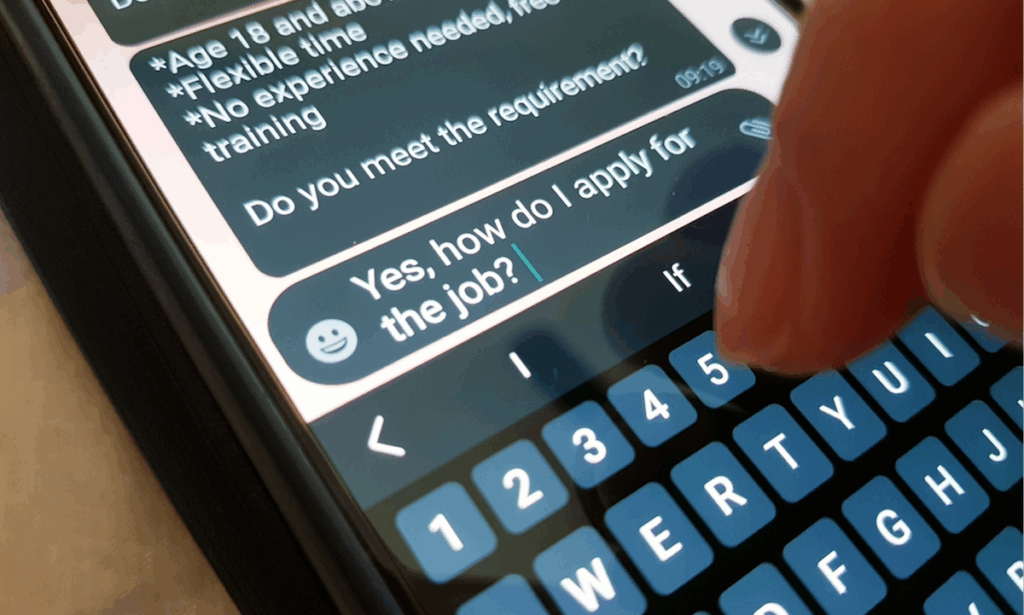

Recent reports indicate that generative AI tools are giving rise to a new type of threat for companies: job seekers who apply under false identities. These applicants use AI tools to create fake profiles, counterfeit photo IDs, fabricated employment histories, and even deepfake videos for job interviews.

The Tactics of Fraudulent Job Seekers

The primary goal of these fraudsters is to secure remote employment, often in targeted sectors such as cybersecurity and cryptocurrency. Once hired, they exploit their access to steal sensitive company data, trade secrets, or funds. In some instances, they may also install malware and demand ransom, or divert their earnings to hostile entities such as the North Korean government.

Projected Increase in Fraudulent Job Applications

Research from Gartner projects that one in four job candidates could be fraudulent by 2028. This projection underscores the need for companies to tighten their hiring practices and implement robust verification measures to protect against such threats.

Strategies for Mitigating Risks

In response to the rise of fake job applicants, many companies have begun deploying advanced solutions. These include partnering with identity-verification firms and using video authentication technologies designed to identify deepfake videos, thereby reducing the risk of hiring fraudsters.

Challenges in Remote Hiring

Remote hiring, onboarding, and training present unique challenges for employers, as highlighted in the “Digital Identity Tracker®” report by PYMNTS Intelligence and Jumio. The pandemic accelerated the need for effective digital identity verification solutions, which enable seamless remote hiring and onboarding and replace outdated manual processes.

AI’s Role in Enhancing Security

To counter increasingly sophisticated cyber threats, more businesses are using AI to strengthen their security measures. According to PYMNTS, firms are implementing AI-driven solutions to improve their defenses against tactics such as business email compromise (BEC) and malicious links.

Financial Impact of Business Email Compromise

Fraudulent schemes continue to cost businesses significant amounts. According to the FBI’s Internet Crime Report, BEC attacks in the U.S. led to adjusted losses of $2.9 billion in 2023, while malware attacks resulted in $59.6 million in losses. These figures highlight the urgency for organizations to adopt effective protective measures against ongoing and emerging threats.

Combatting Employment Fraud in the Age of Generative AI

In an era increasingly defined by technological advancements, companies are facing an unexpected challenge: job seekers misrepresenting their identities. Generative AI tools have made it easier for individuals to create detailed yet fraudulent profiles.

The Mechanics of Job Applicant Fraud

Fraudsters are leveraging artificial intelligence to fabricate convincing job applications. They are not only generating false profiles but also creating fake photo IDs, employment histories, and even deepfake videos for interviews. This alarming trend allows them to vie for remote job positions, where the risk of detection is notably lower.

Potential Threats to Organizations

Once these deceptive job seekers secure employment, they pose significant risks to organizations. Their motives may include stealing sensitive data, trade secrets, or financial resources. In some extreme cases, they may even deploy malware and extort companies for ransom or redirect salaries to unauthorized entities.

Industry Vulnerability

While these scams primarily target cybersecurity and cryptocurrency firms, they are spreading across other industries. According to Gartner research, one in four job candidates may be fraudulent by 2028. This projection underscores the need for businesses to bolster their vetting processes.

Preventative Measures and Solutions

To combat the rise of fake job applicants, many companies are implementing enhanced identity verification processes. These measures include collaborating with identity-verification firms for thorough candidate vetting and utilizing video authentication technologies to detect deepfake content in interviews.

The Role of Digital Identity Verification

Remote hiring has introduced complexities, making digital identity verification a vital tool for organizations. During the pandemic, many businesses turned to digital solutions to streamline the hiring and onboarding processes, moving away from manual methods that are often cumbersome and less secure.

Leveraging AI for Enhanced Security

Companies are increasingly adopting AI-driven solutions to strengthen their security infrastructure. For instance, advanced systems are being used to combat threats such as business email compromise (BEC) and social engineering attacks. Experts emphasize the need for a comprehensive detection strategy to mitigate these risks effectively.

Conclusion

The landscape of employment has shifted dramatically with the emergence of generative AI. As companies navigate these new challenges, it is essential to adopt proactive measures to protect their organizations from fraudulent job applicants. Through enhanced verification processes and the strategic use of technology, businesses can safeguard their operations against these modern threats.
